Because the extended file system (ext) is the default file system on most Linux distributions, this article uses ext to explain superblocks.

Before looking at superblocks, let's go over some common terminology and the building blocks of a file system.

Blocks in File System

When a partition or disk is formatted, the sectors on the hard disk are first divided into small groups. Each such group of sectors is called a block. The block size can be specified on the command line when a user formats a partition.

    mkfs -t ext3 -b 4096 /dev/sda1

In the above command we specified the block size while formatting the /dev/sda1 partition. The size is given in bytes, so each block is 4096 bytes.

Block size for ext2 can be 1 KB, 2 KB, 4 KB or 8 KB

Block size for ext3 can be 1 KB, 2 KB, 4 KB or 8 KB

Block size for ext4 can be 1 KB to 64 KB

The block size you select affects the following:

- Maximum File Size

- Maximum File System Size

- Performance

Block size affects performance because the file system driver reads from and writes to the underlying drive in units of blocks. Reading a large file through many small blocks, one by one, takes longer than reading it through fewer large blocks. So if you intend to store large files on the file system, the basic idea is to choose a larger block size.

With a larger block size, fewer I/O operations are needed to read or write the same amount of data.


If you intend to store mostly small files, it is better to go with a smaller block size. Every file occupies at least one whole block, so smaller blocks waste less disk space, and this can also help performance.
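To make the trade-off concrete, here is a minimal Python sketch of the arithmetic. The function names `blocks_needed` and `wasted_bytes` are illustrative and not part of any tool. The sketch assumes only that a file occupies a whole number of blocks, and it ignores file system metadata.

```python
def blocks_needed(file_size: int, block_size: int) -> int:
    """Number of blocks a file of file_size bytes occupies (ceiling division)."""
    return -(-file_size // block_size)


def wasted_bytes(file_size: int, block_size: int) -> int:
    """Unused bytes in the last, partially filled block of the file."""
    return blocks_needed(file_size, block_size) * block_size - file_size
```

Under these assumptions a 1 GiB file needs 262144 blocks of 4096 bytes but 1048576 blocks of 1024 bytes, while a 100-byte file leaves 3996 bytes unused in a 4096-byte block and only 924 bytes unused in a 1024-byte block.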

A hard disk sector is the smallest addressable storage unit of the drive. Traditionally, physical drives have a sector size of 512 bytes, although many newer drives use 4096-byte sectors. Keep in mind that the sector is a property of the drive, whereas the block size of the file system (discussed above) is a software construct; the two should not be confused.

The Linux kernel performs all its operations on a file system in units of the file system's block size. The important thing to understand here is that the block size can never be smaller than the hard disk's sector size, and is always a multiple of the sector size. The Linux kernel also requires the file system block size to be smaller than or equal to the system page size.

The system page size can be checked with the command below.

    root@localhost:~# getconf PAGE_SIZE
    4096
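The three constraints described above can be written down as a small check. This is only a sketch: `is_valid_block_size` is a hypothetical helper, the 512-byte default sector size is an assumption, and the function tests nothing beyond the rules stated in the text.

```python
import os


def is_valid_block_size(block_size, sector_size=512, page_size=None):
    """Check a file system block size against the sector and page size rules."""
    if page_size is None:
        # Same value that `getconf PAGE_SIZE` prints (Unix-like systems only).
        page_size = os.sysconf("SC_PAGE_SIZE")
    return (
        block_size >= sector_size            # never smaller than a sector
        and block_size % sector_size == 0    # always a multiple of the sector size
        and block_size <= page_size          # not larger than the page size
    )
```

For example, with a 4096-byte page size, block sizes of 1024 and 4096 pass the check, while 256 (smaller than a sector) and 8192 (larger than a page) do not.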

Block Groups in File System

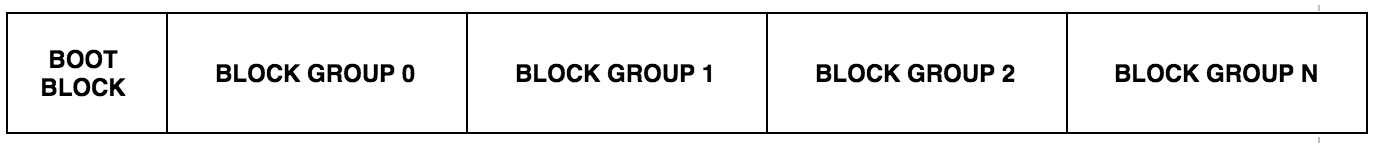

The blocks discussed in the previous section are further grouped together into block groups for ease of access during reads and writes. This is done primarily to reduce the time taken to read or write large amounts of data.

The ext file system divides the entire space of the partition into equal-sized block groups, arranged sequentially one after the other.

The figure at the start of this section shows what a typical partition layout looks like at a very high level.

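The number of blocks per group is fixed when the file system is created and cannot be changed afterwards. Generally, the number of blocks per block group is 8 * block size, because each group tracks its blocks in a block bitmap that occupies a single block, and one block holds 8 * block size bits.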
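As a rough illustration of this rule, the following Python sketch computes the blocks per group and the resulting number of block groups. The function names are made up for this example, and the calculation assumes the default of 8 * block size blocks per group.

```python
def blocks_per_group(block_size: int) -> int:
    """One bitmap block holds 8 * block_size bits, one bit per tracked block."""
    return 8 * block_size


def group_count(total_blocks: int, block_size: int, first_data_block: int = 0) -> int:
    """Number of block groups needed to cover the file system (rounded up)."""
    per_group = blocks_per_group(block_size)
    return -(-(total_blocks - first_data_block) // per_group)
```

With a 4096-byte block size this gives 32768 blocks per group, and a file system of 26214400 blocks is split into 800 block groups.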

Let's look at the output of the mke2fs command, which displays some of the information we have discussed so far.

    root@localhost:~# mke2fs /dev/xvdf
    mke2fs 1.42.9 (4-Feb-2014)
    Filesystem label=
    OS type: Linux
    Block size=4096 (log=2)
    Fragment size=4096 (log=2)
    Stride=0 blocks, Stripe width=0 blocks
    6553600 inodes, 26214400 blocks
    1310720 blocks (5.00%) reserved for the super user
    First data block=0
    Maximum filesystem blocks=4294967296
    800 block groups
    32768 blocks per group, 32768 fragments per group
    8192 inodes per group
    Superblock backups stored on blocks:
        32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,
        4096000, 7962624, 11239424, 20480000, 23887872
    Allocating group tables: done
    Writing inode tables: done
    Writing superblocks and filesystem accounting information: done

The output above gives the following details:

- Block Size (4096 bytes)

- 800 Block Groups

- 32768 Blocks per group (which is 8*4096, as mentioned earlier)

It also shows the superblock backup locations on the partition. Each number is the block at which a block group holding a backup superblock begins; for example, block 32768 is the first block of block group 1.

What is File System Superblock?

The simplest definition of a superblock is that it is the metadata of the file system. Just as inodes store metadata about files, superblocks store metadata about the file system. Because the superblock holds critical information about the file system, preventing its corruption is of utmost importance.

If the superblock of a file system is corrupted, you will face issues while mounting that file system. The system verifies and modifies the superblock each time you mount the file system.

Superblocks also store the configuration of the file system. Some of the higher-level details stored in the superblock are listed below.

- Number of blocks in the file system

- Number of free blocks in the file system

- Inodes per block group

- Blocks per block group

- Number of times the file system was mounted since the last fsck

- Mount time

- UUID of the file system

- Write time

- File system state (i.e. whether it was cleanly unmounted, whether errors were detected, etc.)

- The file system type (i.e. whether it is ext2, ext3 or ext4)

- The operating system on which the file system was formatted
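To show how a few of these fields can be read directly, here is a hedged Python sketch that decodes the beginning of a raw superblock. The field offsets follow the published ext2/ext4 on-disk layout (little-endian fields, primary superblock at byte offset 1024 of the device, UUID at bytes 104 to 119). The function names and the returned dictionary keys are assumptions made for this example, and only the low 32 bits of the block counts are decoded.

```python
import struct
import uuid

SUPERBLOCK_OFFSET = 1024   # byte offset of the primary superblock on the device
EXT_MAGIC = 0xEF53         # magic number shared by ext2, ext3 and ext4


def parse_superblock(sb: bytes) -> dict:
    """Decode a few fields from the start of a raw ext superblock."""
    (inodes, blocks, _reserved, free_blocks, _free_inodes, _first_block,
     log_block_size, _log_cluster_size, blocks_per_group, _clusters_per_group,
     inodes_per_group, mount_time, write_time,
     mount_count, _max_mount_count, magic, state) = struct.unpack_from("<13I4H", sb, 0)
    if magic != EXT_MAGIC:
        raise ValueError(f"not an ext superblock (magic 0x{magic:04X})")
    return {
        "inodes": inodes,
        "blocks": blocks,                       # low 32 bits only
        "free_blocks": free_blocks,             # low 32 bits only
        "block_size": 1024 << log_block_size,   # stored as log2(size) - 10
        "blocks_per_group": blocks_per_group,
        "inodes_per_group": inodes_per_group,
        "mount_time": mount_time,               # seconds since the epoch
        "write_time": write_time,               # seconds since the epoch
        "mount_count": mount_count,
        "clean": bool(state & 1),               # bit 0 set: cleanly unmounted
        "uuid": str(uuid.UUID(bytes=bytes(sb[104:120]))),
    }


def read_primary_superblock(path: str) -> dict:
    """Read the primary superblock from a device or image file (needs read access)."""
    with open(path, "rb") as dev:
        dev.seek(SUPERBLOCK_OFFSET)
        return parse_superblock(dev.read(1024))
```

Calling `read_primary_superblock` on a device usually requires root privileges. For everyday use the dumpe2fs tool is the more complete and safer way to inspect these values.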

The primary copy of the superblock is stored in the very first block group. It is called the primary superblock because it is the one the system reads when you mount the file system. As block groups are counted from 0, we can say that the primary superblock is stored at the beginning of block group 0.

As the superblock is a very critical component of the file system, a redundant backup copy was originally placed in each block group. In other words, every block group in the file system had a backup superblock. This is done so that the superblock can be recovered if the primary one gets corrupted.

You can easily imagine that storing backup copies of the superblock in every block group can consume a considerable amount of file system storage space. For this reason, later versions implemented a feature called "sparse_super", which stores backup superblocks only in block groups 0 and 1 and in groups whose numbers are powers of 3, 5 and 7. This option is enabled by default on recent systems, which is why you see backup copies of the superblock only in some block groups (as is evident from the mke2fs output shown in the previous section).
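The sparse_super rule is easy to reproduce. The sketch below, with hypothetical function names, lists the groups that hold a backup and converts them to block numbers, assuming every group has the same number of blocks.

```python
def sparse_super_backup_groups(group_count: int) -> list:
    """Block groups (other than group 0) that hold a backup superblock."""
    groups = set()
    if group_count > 1:
        groups.add(1)
    for base in (3, 5, 7):
        power = base
        while power < group_count:
            groups.add(power)
            power *= base
    return sorted(groups)


def backup_superblock_blocks(group_count: int, blocks_per_group: int,
                             first_data_block: int = 0) -> list:
    """First block of every group that holds a backup superblock."""
    return [first_data_block + group * blocks_per_group
            for group in sparse_super_backup_groups(group_count)]
```

For 800 block groups of 32768 blocks each, `backup_superblock_blocks(800, 32768)` yields 32768, 98304, 163840 and so on up to 23887872, which are the same 14 locations that mke2fs reported earlier.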

How to view Superblock Information of a File System?

You can view the superblock information of an existing file system using the dumpe2fs command, as shown below.

    root@localhost:~# dumpe2fs -h /dev/xvda1
    dumpe2fs 1.42.9 (4-Feb-2014)
    Filesystem volume name: cloudimg-rootfs
    Last mounted on: /
    Filesystem UUID: f75f9307-27dc-4af8-87b7-f414c0fe280f
    Filesystem magic number: 0xEF53
    Filesystem revision #: 1 (dynamic)
    Filesystem features: has_journal ext_attr resize_inode dir_index filetype needs_recovery extent flex_bg sparse_super large_file huge_file uninit_bg dir_nlink extra_isize
    Filesystem flags: signed_directory_hash
    Default mount options: (none)
    Filesystem state: clean
    Errors behavior: Continue
    Filesystem OS type: Linux
    Inode count: 6553600
    Block count: 26212055
    Reserved block count: 1069295
    Free blocks: 20083290
    Free inodes: 6470905
    First block: 0
    Block size: 4096
    Fragment size: 4096
    Reserved GDT blocks: 505
    Blocks per group: 32768
    Fragments per group: 32768
    Inodes per group: 8192
    Inode blocks per group: 512
    Flex block group size: 16
    Filesystem created: Sat Sep 27 13:05:57 2014
    Last mount time: Mon Feb 2 14:43:31 2015
    Last write time: Sat Sep 27 13:06:55 2014
    Mount count: 4
    Maximum mount count: 20
    Last checked: Sat Sep 27 13:05:57 2014
    Check interval: 15552000 (6 months)
    Next check after: Thu Mar 26 13:05:57 2015
    Lifetime writes: 305 GB
    Reserved blocks uid: 0 (user root)
    Reserved blocks gid: 0 (group root)
    First inode: 11
    Inode size: 256
    Required extra isize: 28
    Desired extra isize: 28
    Journal inode: 8
    First orphan inode: 396056
    Default directory hash: half_md4
    Directory Hash Seed: 2124542b-ea2f-4552-afaa-c5720283d2cd
    Journal backup: inode blocks
    Journal features: journal_incompat_revoke
    Journal size: 128M
    Journal length: 32768
    Journal sequence: 0x0151d29d
    Journal start: 11415
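If you want to use these values in a script, the `key: value` lines of the header are simple to parse. The following is a minimal sketch; the helper names are hypothetical, and it assumes the output keeps the `key: value` layout shown above.

```python
import subprocess


def parse_dumpe2fs_header(text: str) -> dict:
    """Turn the 'key: value' lines of `dumpe2fs -h` output into a dictionary."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")   # split on the first colon only
        if sep and key.strip():
            fields[key.strip()] = value.strip()
    return fields


def filesystem_size_bytes(fields: dict) -> int:
    """Total size of the file system: block count times block size."""
    return int(fields["Block count"]) * int(fields["Block size"])


def read_header(device: str) -> dict:
    """Run `dumpe2fs -h` on a device (usually needs root) and parse its output."""
    result = subprocess.run(["dumpe2fs", "-h", device],
                            capture_output=True, text=True, check=True)
    return parse_dumpe2fs_header(result.stdout)
```

Splitting on the first colon only keeps values such as timestamps, which contain colons themselves, in one piece.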

You can also view the exact locations of the primary superblock and its backups using the same dumpe2fs command, as shown below.

    root@localhost:~# dumpe2fs /dev/xvda1 | grep -i superblock
    dumpe2fs 1.42.9 (4-Feb-2014)
    Primary superblock at 0, Group descriptors at 1-7
    Backup superblock at 32768, Group descriptors at 32769-32775
    Backup superblock at 98304, Group descriptors at 98305-98311
    Backup superblock at 163840, Group descriptors at 163841-163847
    Backup superblock at 229376, Group descriptors at 229377-229383
    Backup superblock at 294912, Group descriptors at 294913-294919
    Backup superblock at 819200, Group descriptors at 819201-819207
    Backup superblock at 884736, Group descriptors at 884737-884743
    Backup superblock at 1605632, Group descriptors at 1605633-1605639
    Backup superblock at 2654208, Group descriptors at 2654209-2654215
    Backup superblock at 4096000, Group descriptors at 4096001-4096007
    Backup superblock at 7962624, Group descriptors at 7962625-7962631
    Backup superblock at 11239424, Group descriptors at 11239425-11239431
    Backup superblock at 20480000, Group descriptors at 20480001-20480007
    Backup superblock at 23887872, Group descriptors at 23887873-23887879
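The backup locations can also be pulled out of this output programmatically. Here is a small sketch using a regular expression; the function name is an assumption, and it relies on the "Backup superblock at N" wording shown above.

```python
import re

_BACKUP_RE = re.compile(r"Backup superblock at (\d+)")


def backup_superblock_locations(dumpe2fs_output: str) -> list:
    """Return the block numbers of all backup superblocks, in output order."""
    return [int(block) for block in _BACKUP_RE.findall(dumpe2fs_output)]
```

The primary superblock line is deliberately not matched, so the result contains only the locations that can be used for recovery.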

How can I use backup superblocks to recover a corrupted file system?

The first thing to do is run a file system check with the fsck utility. This is as simple as running the fsck command against the affected file system, which should be unmounted while it is checked, as shown below.

    root@localhost:~# fsck.ext3 -v /dev/xvda1

If the fsck output shows superblock read errors, follow the steps below to fix the problem.

The first step is to identify where the backup superblocks are located. This can be done with the dumpe2fs command shown earlier, or with the command below.

    root@localhost:~# mke2fs -n /dev/xvda1

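The -n option used with mke2fs in the above example shows the backup superblock locations without actually creating a file system. Be careful not to omit it, because without -n mke2fs would create a new file system on the device. The reported locations are correct only if mke2fs is given the same parameters, such as the block size, that were used when the file system was originally created. Read the mke2fs man page for more information on this command-line switch.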

The second step is to restore the superblock from a backup copy using the e2fsck command, as shown below.

    root@localhost:~# e2fsck -b 32768 /dev/xvda1

In the above example, 32768 is the location of the first backup copy of the superblock. Once the command succeeds, you can retry mounting the file system.
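If the first backup is damaged as well, the same command can be repeated with the next location. The sketch below automates that loop and should be read as an illustration only. The function name and the injectable `run` parameter are assumptions, the default 4096-byte block size is just an example value, and the real command needs root privileges, an unmounted file system and possibly interactive answers. It relies on the documented e2fsck options -b and -B and on the documented exit codes, where values below 4 mean that no errors were left uncorrected.

```python
import subprocess


def first_usable_backup(device, backups, block_size=4096, run=None):
    """Try e2fsck with each backup superblock; return the first one that works."""
    if run is None:
        # Default runner: execute the command and return its exit code.
        run = lambda argv: subprocess.run(argv).returncode
    for block in backups:
        argv = ["e2fsck", "-b", str(block), "-B", str(block_size), device]
        if run(argv) < 4:   # 0, 1 or 2: clean, or errors were corrected
            return block
    return None             # no backup superblock could be used
```

Passing a custom `run` callable makes it possible to print or log the commands instead of executing them, which is a sensible first step before touching a real device.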

Alternatively, you can use the sb option of the mount command, which lets you specify the superblock to use while mounting the file system. As mentioned earlier in the article, the primary superblock is read by default when you mount a file system. With sb you can force mount to read a backup superblock instead, in case the primary one is corrupted. According to the mount man page, sb takes the block number in 1 KB units, so on a file system with 4096-byte blocks the backup at block 98304 is specified as 98304 * 4 = 393216. An example mount command using a backup superblock is shown below.

    root@localhost:~# mount -o sb=393216 /dev/xvda1 /data

The above mount command uses the backup superblock located at file system block 98304 while mounting.
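The mount man page documents the sb value as a block number in 1 KB units, so a backup location reported in file system blocks has to be scaled by the block size. A minimal sketch of that conversion, with a hypothetical function name:

```python
def mount_sb_value(fs_block: int, block_size: int) -> int:
    """Convert a file system block number to the 1 KB units expected by sb=."""
    return fs_block * block_size // 1024
```

For a file system with 4096-byte blocks, block 98304 becomes sb=393216 and block 32768 becomes sb=131072; with 1024-byte blocks the number is passed through unchanged.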
